Focus vs Clipping Magic: Open Source vs Commercial Tool Comparison

This page compares Focus, our open source background removal model, with Clipping Magic, a popular commercial tool. Each comparison includes quantitative error metrics (MAE and MGE) measured against ground truth alpha mattes, which provide an objective quality assessment across diverse image types.

About This Comparison

This is a technical quality comparison based on ground truth alpha mattes and quantitative error metrics. The two tools take different approaches to background removal:

- Focus is open source (Apache 2.0), runs locally, and prioritizes privacy

- Clipping Magic is a commercial web service with different pricing and features

- Error metrics (MAE, MGE) measure accuracy against ground truth data

- Lower error values indicate better accuracy (marked with ✓)

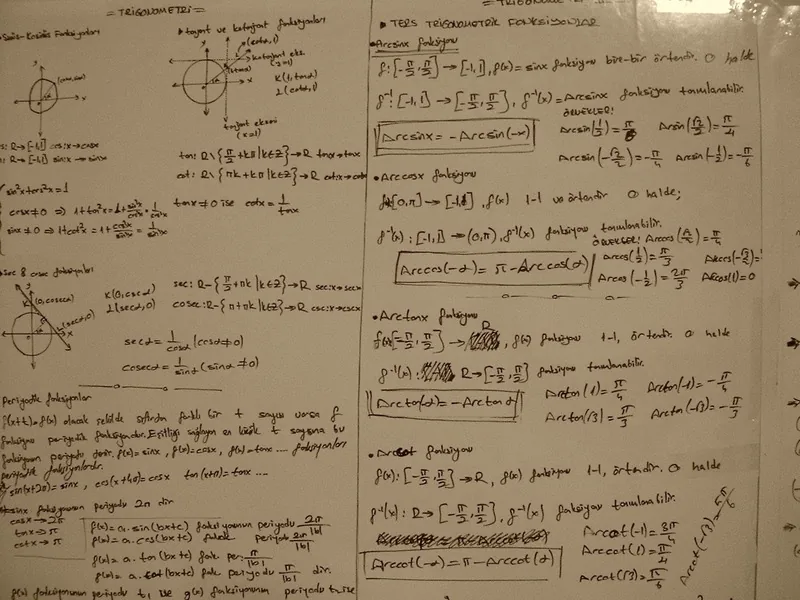

Understanding the Comparison

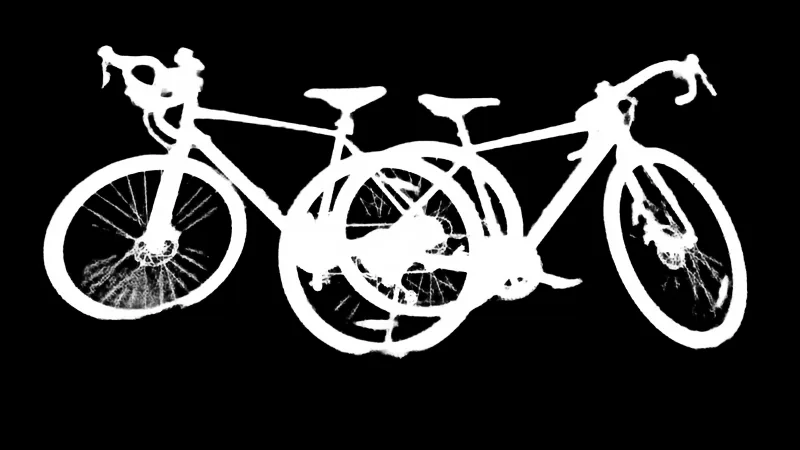

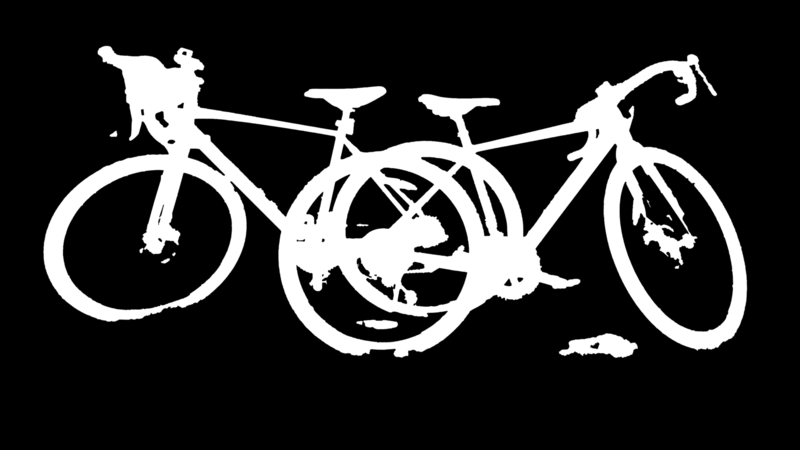

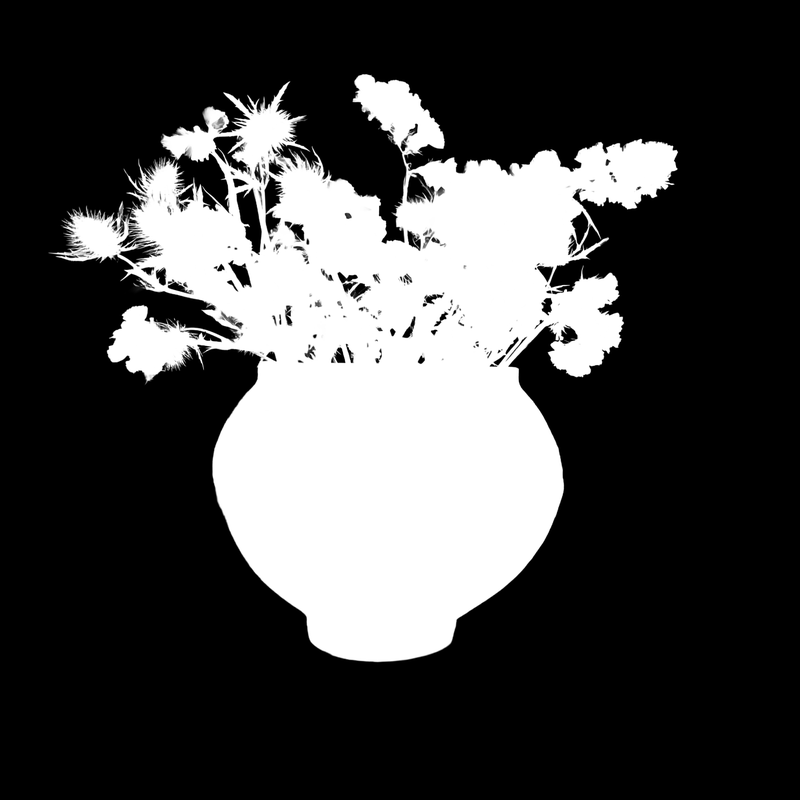

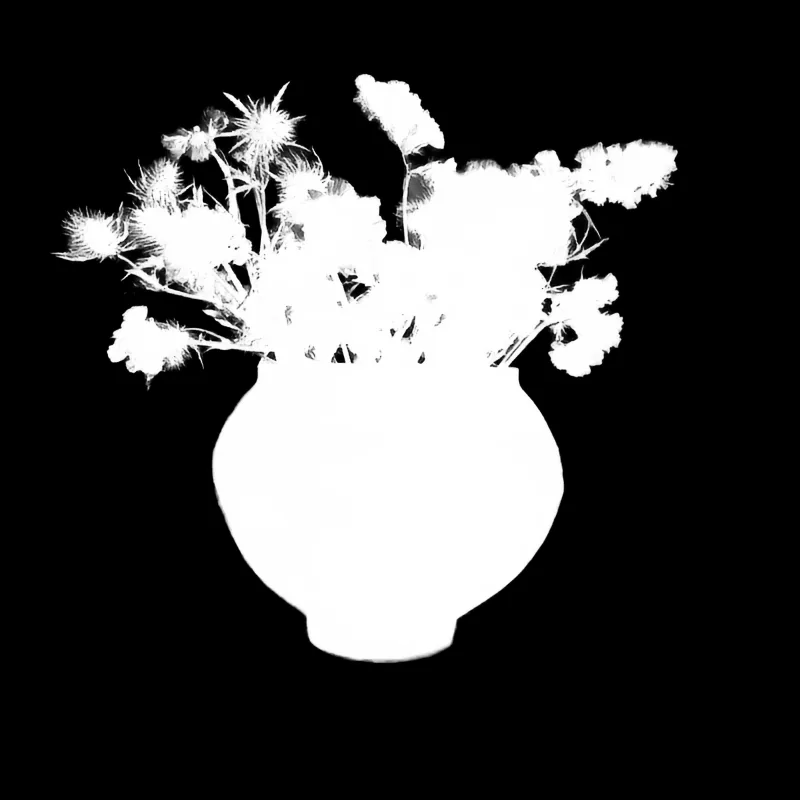

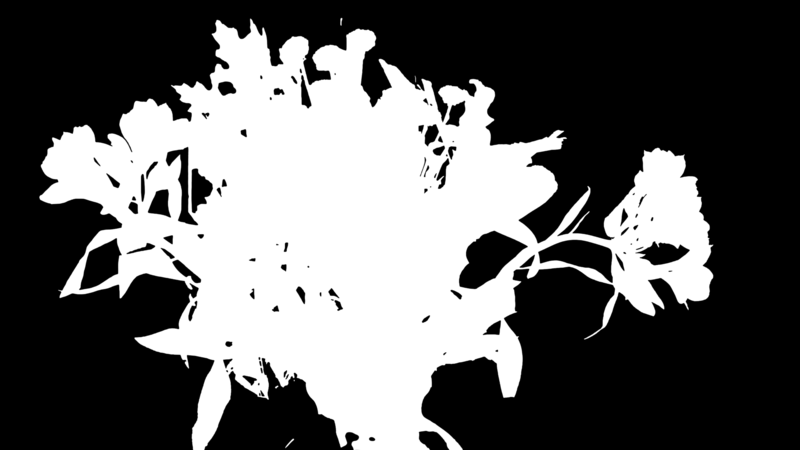

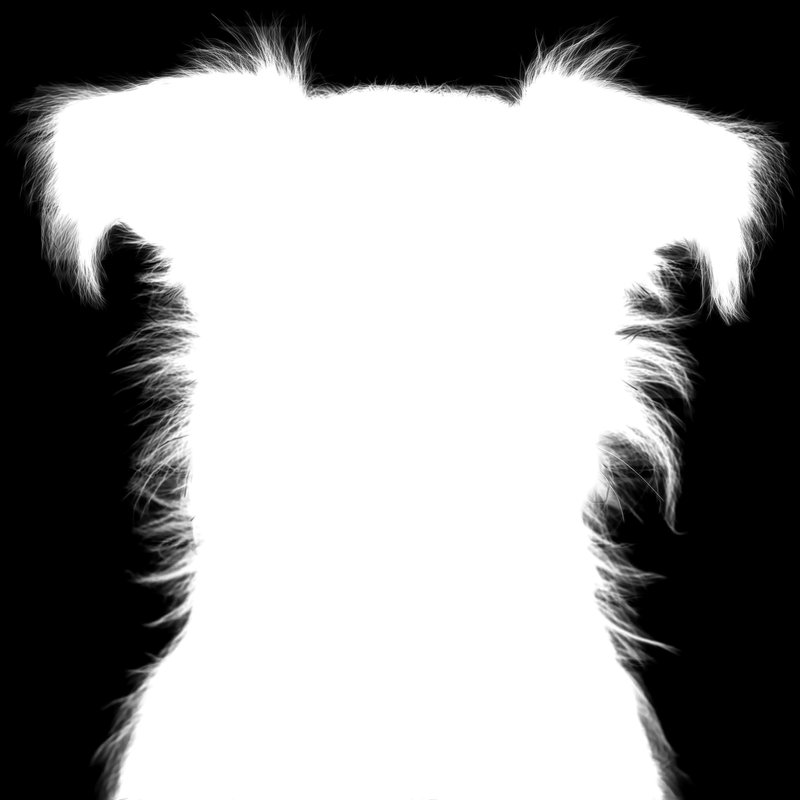

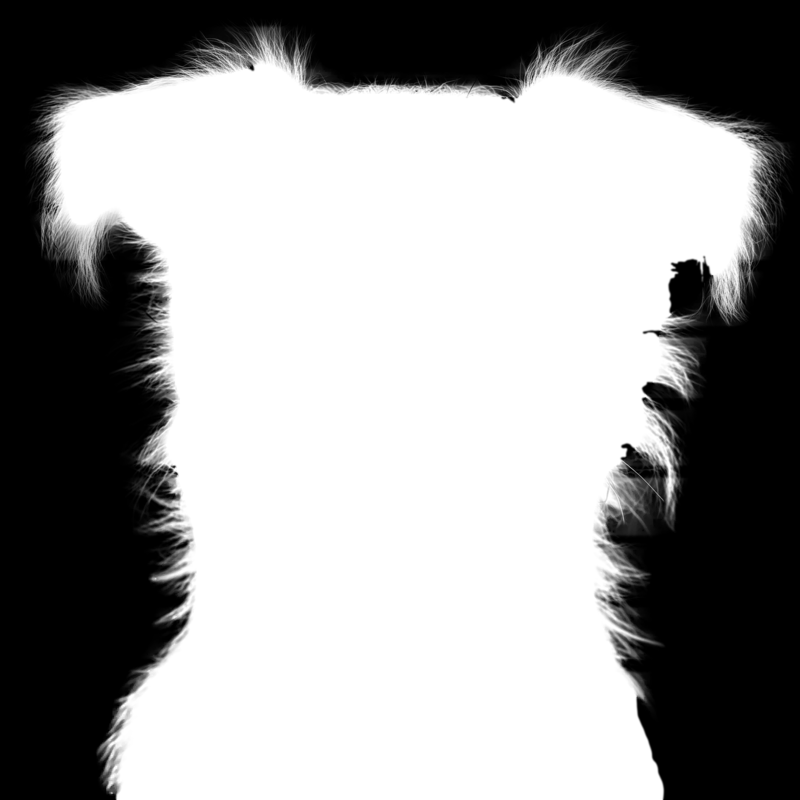

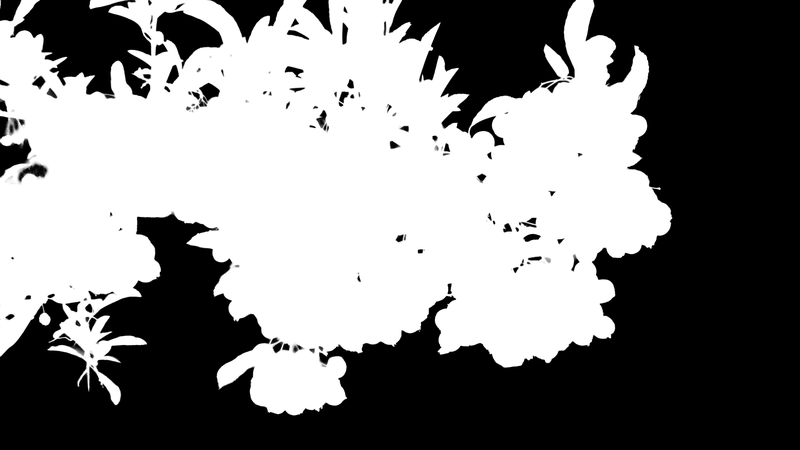

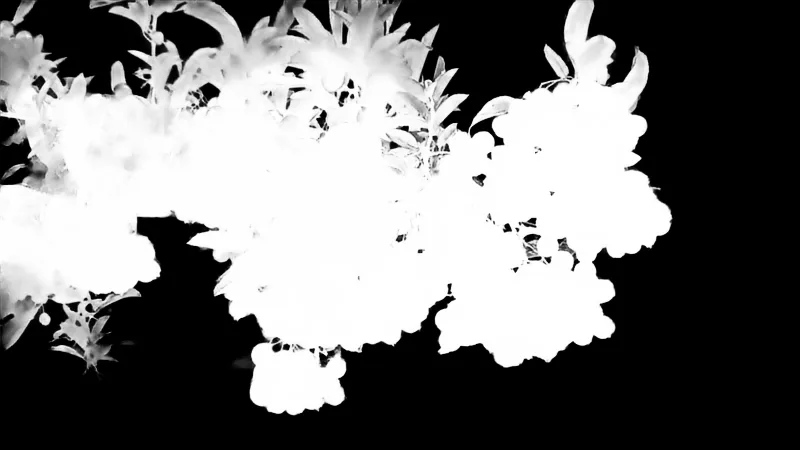

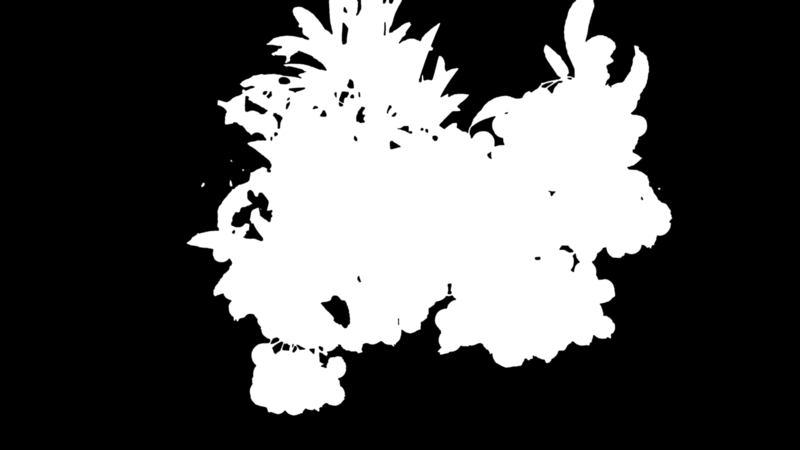

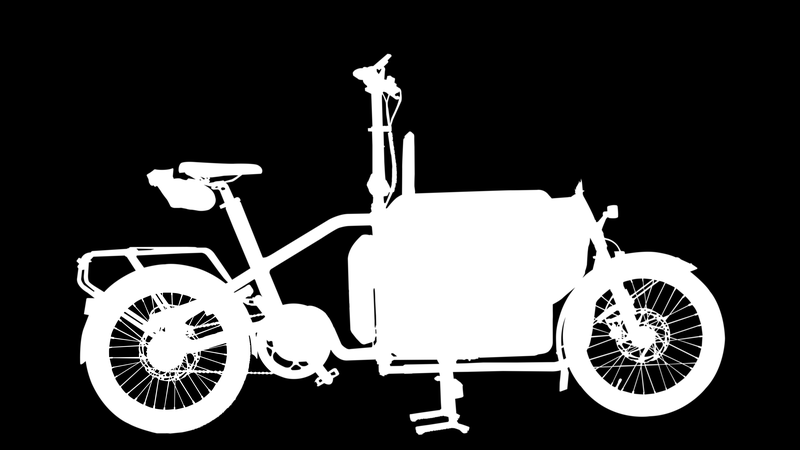

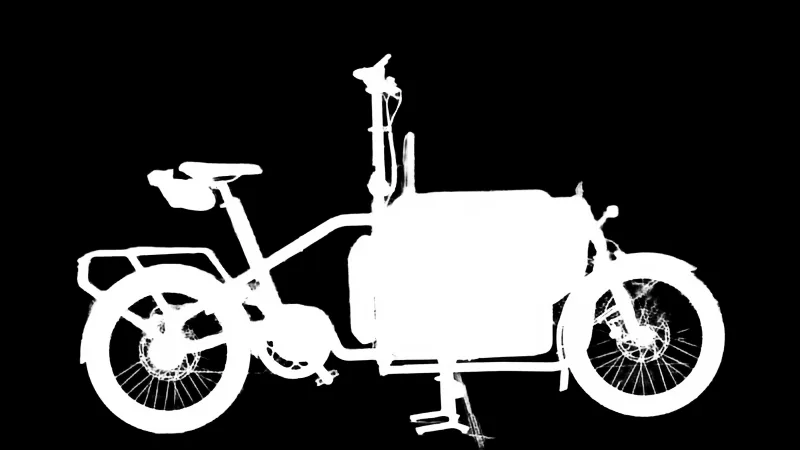

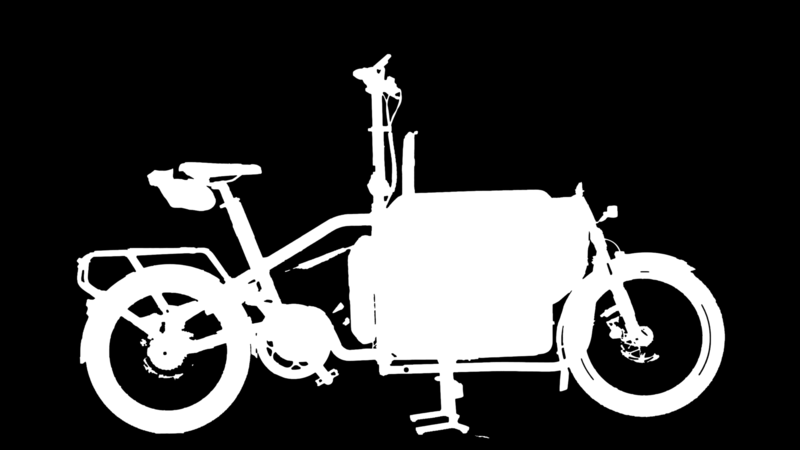

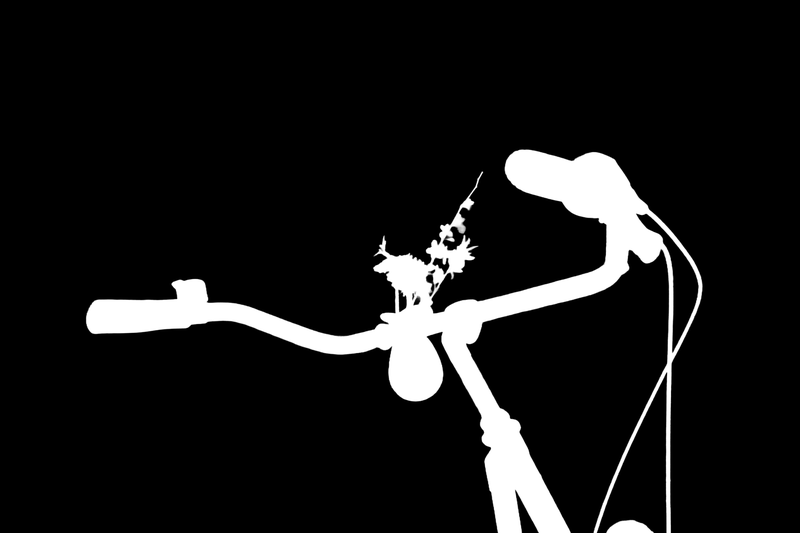

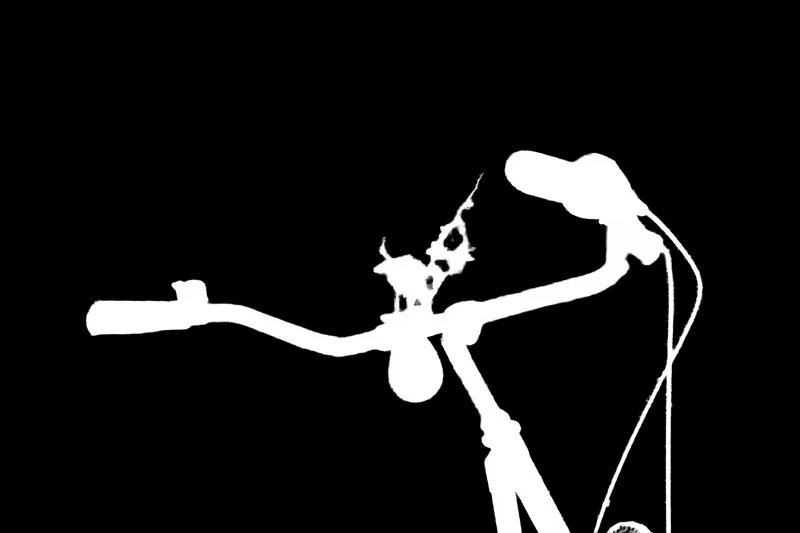

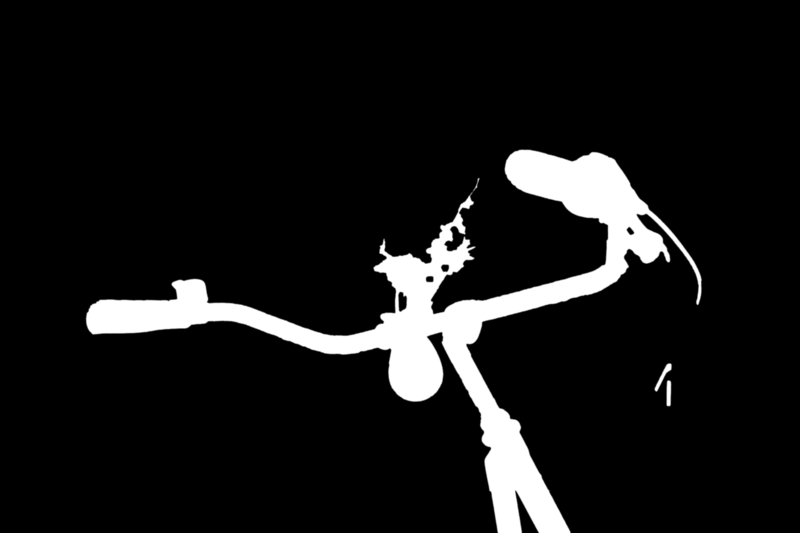

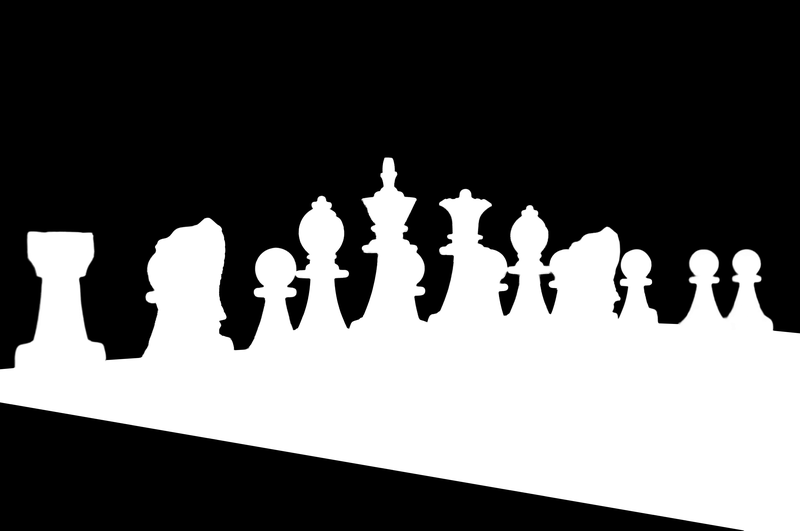

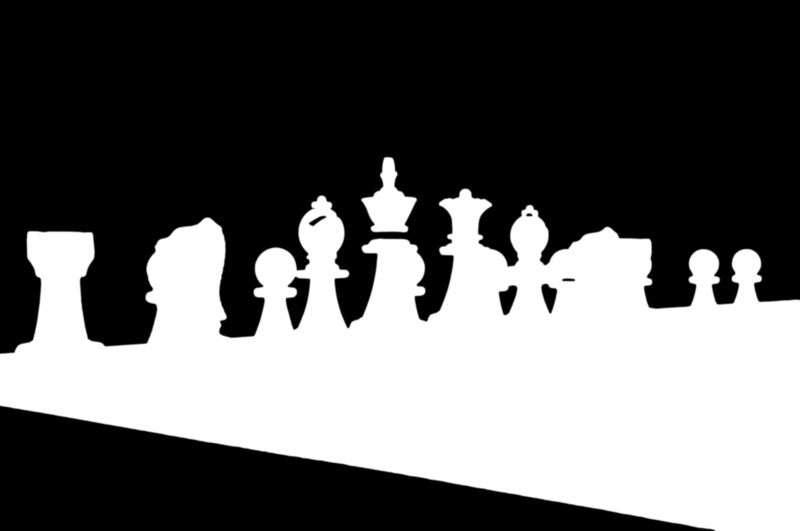

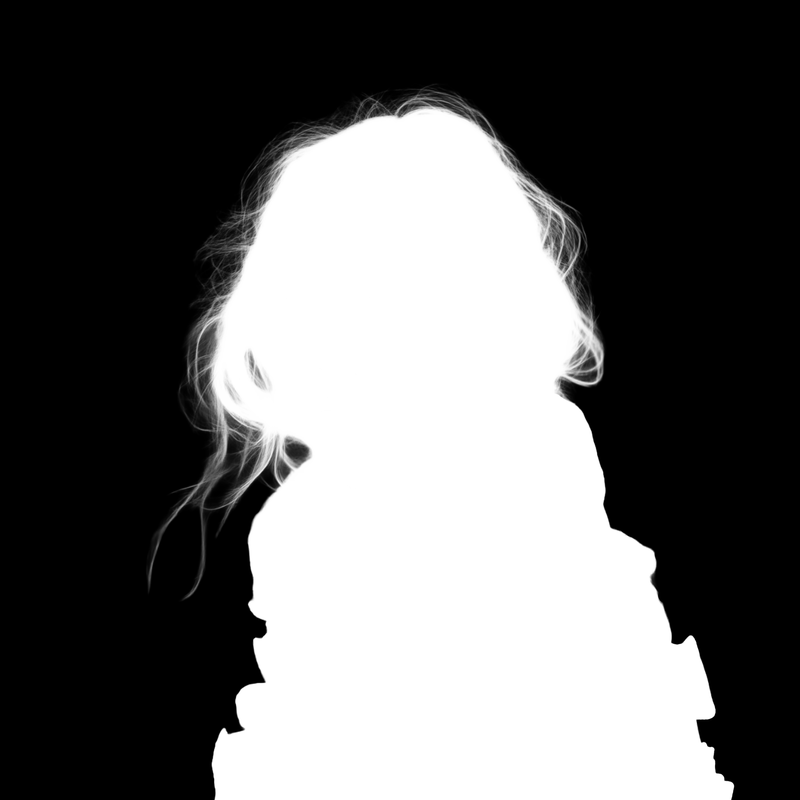

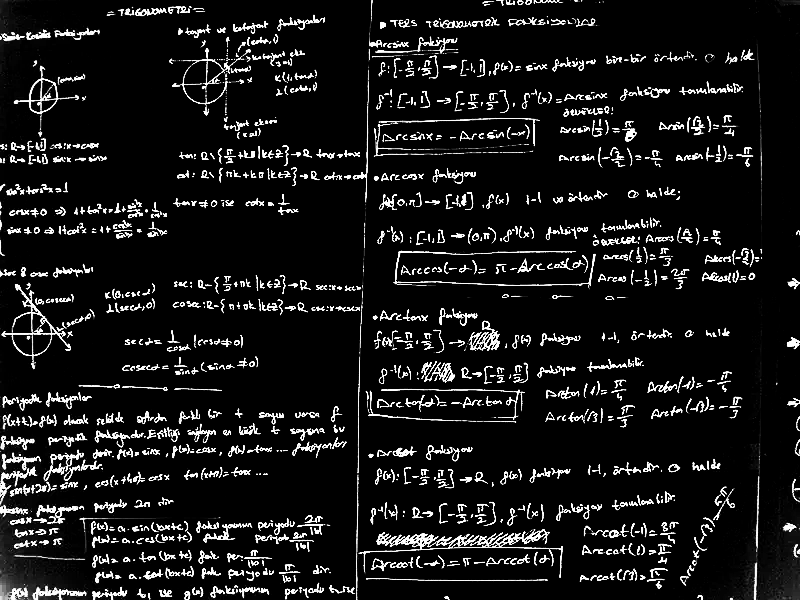

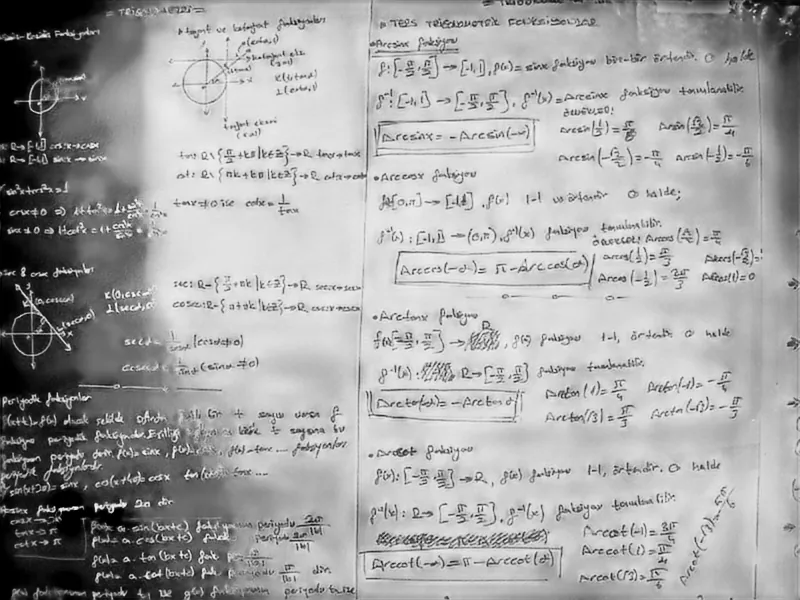

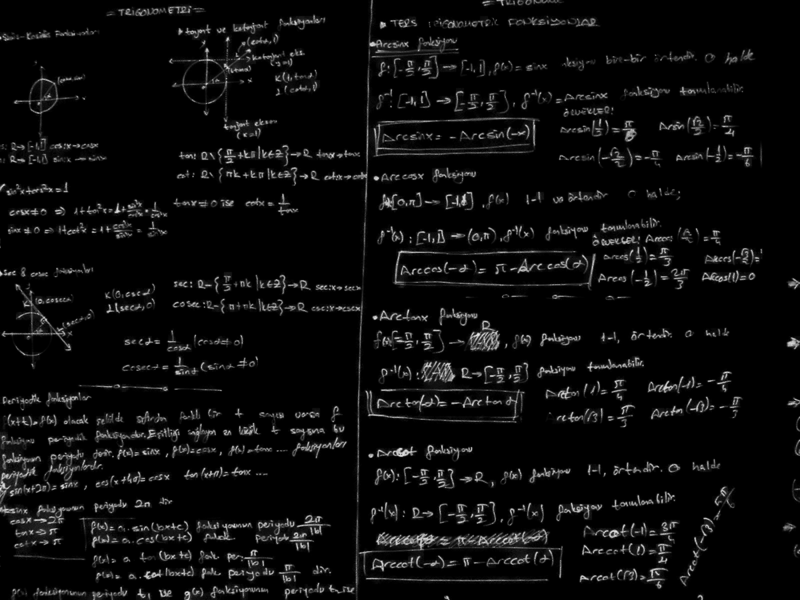

Each comparison shows four images side by side: the original image, ground truth alpha matte, Focus model output, and Clipping Magic output. Error metrics are displayed below each model output to quantify accuracy.

Reading the Comparisons

Each comparison shows four images in sequence:

- Original Image: The input image

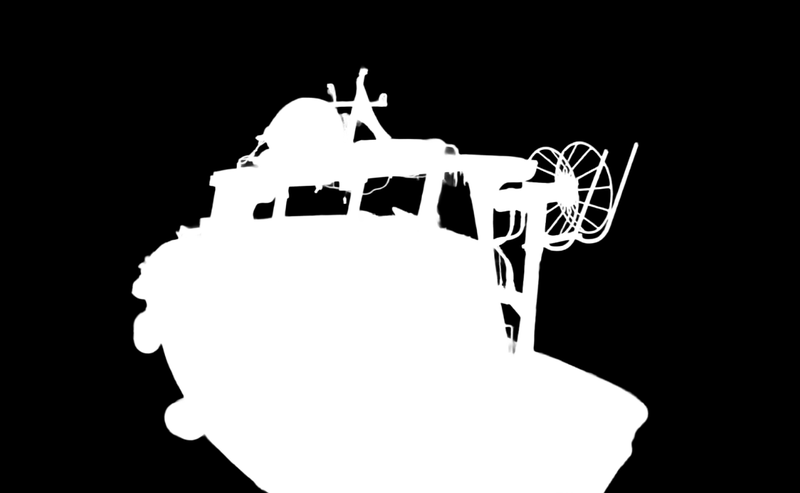

- Ground Truth: Reference alpha matte (created manually or taken from a high-quality benchmark)

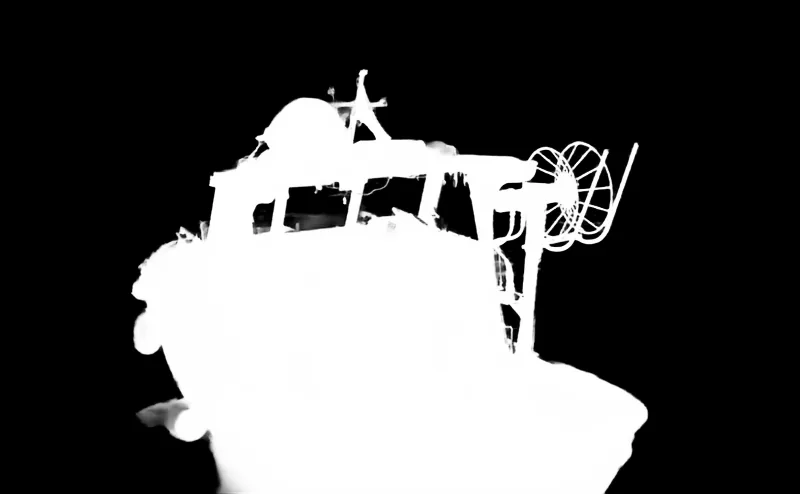

- Focus: Open source model output with error metrics

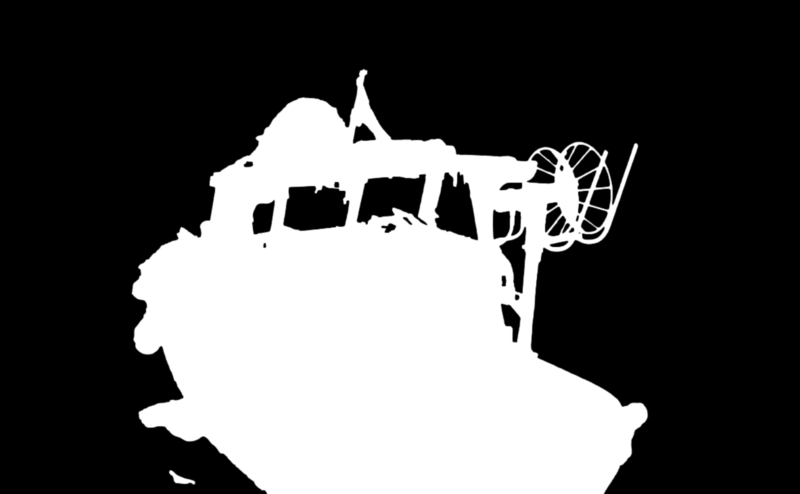

- Clipping Magic: Commercial tool output with error metrics

Alpha mattes are grayscale images in which white represents the foreground (subject) and black represents the background. Intermediate gray values represent partial transparency, which typically occurs along soft edges.
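To make these matte semantics concrete, here is a minimal NumPy sketch of standard alpha compositing, assuming an 8-bit matte where 255 means foreground and 0 means background. The function name `composite` is hypothetical and is not part of the withoutbg package.

```python
import numpy as np

def composite(foreground: np.ndarray, background: np.ndarray, alpha: np.ndarray) -> np.ndarray:
    """Blend foreground over background using an 8-bit grayscale alpha matte.

    foreground, background: H x W x 3 uint8 arrays
    alpha: H x W uint8 array (255 = subject, 0 = background, in between = partial)
    """
    a = alpha.astype(np.float32)[..., None] / 255.0  # scale to [0, 1], add a channel axis
    blended = a * foreground.astype(np.float32) + (1.0 - a) * background.astype(np.float32)
    return np.clip(np.rint(blended), 0, 255).astype(np.uint8)

# Toy 1 x 3 image: an opaque, a half-transparent, and a fully transparent pixel
fg = np.full((1, 3, 3), 200, dtype=np.uint8)
bg = np.zeros((1, 3, 3), dtype=np.uint8)
alpha = np.array([[255, 128, 0]], dtype=np.uint8)
print(composite(fg, bg, alpha)[0, :, 0])
```

The gray value 128 yields a pixel roughly halfway between subject and background, which is exactly how semi-transparent edges such as hair blend into a new backdrop.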

Error Metrics Explained

- MAE (Mean Absolute Error): Average absolute per-pixel difference between the predicted matte and the ground truth. Lower is better. Measures overall accuracy.

- MGE (Mean Gradient Error): Average difference between the gradients (local rate of change) of the predicted and ground truth mattes. Lower is better. Measures edge quality, penalizing edges that are blurred, jagged, or misplaced.

- Winner Indicator (✓): A green checkmark shows which model achieved the lower error for each metric.

- Interpretation: Results vary by image type. Some images favor one model, others favor the other.
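The page does not spell out the exact formulas behind its MAE and MGE values. The sketch below shows one common way to compute both, under stated assumptions: mattes are scaled to [0, 1], and gradients come from NumPy's central differences (`np.gradient`). Benchmarks may use other gradient filters (for example Gaussian derivatives) or a different scale, so numbers from this code are not directly comparable to the figures on this page. All function names are hypothetical.

```python
import numpy as np

def to_unit(alpha: np.ndarray) -> np.ndarray:
    """Return the matte as float64 in [0, 1]; integer (8-bit) mattes are divided by 255."""
    alpha = np.asarray(alpha)
    if np.issubdtype(alpha.dtype, np.integer):
        return alpha.astype(np.float64) / 255.0
    return alpha.astype(np.float64)

def mae(pred: np.ndarray, gt: np.ndarray) -> float:
    """Mean absolute per-pixel difference between predicted and ground truth mattes."""
    return float(np.mean(np.abs(to_unit(pred) - to_unit(gt))))

def gradient_magnitude(alpha: np.ndarray) -> np.ndarray:
    """Per-pixel gradient magnitude (central differences, one-sided at the borders)."""
    gy, gx = np.gradient(alpha)  # derivatives along rows (y) and columns (x)
    return np.hypot(gx, gy)

def mge(pred: np.ndarray, gt: np.ndarray) -> float:
    """Mean absolute difference between the gradient magnitudes of the two mattes.

    Assumption: this gradient operator and averaging are illustrative only.
    """
    diff = gradient_magnitude(to_unit(pred)) - gradient_magnitude(to_unit(gt))
    return float(np.mean(np.abs(diff)))

# Usage: a vertical step edge vs. a prediction that missed the subject entirely
gt = np.zeros((4, 4), dtype=np.uint8)
gt[:, 2:] = 255
empty = np.zeros((4, 4), dtype=np.uint8)
print(mae(empty, gt), mge(empty, gt))
```

MAE reacts to any wrong pixel, while MGE only reacts where the edge structure differs, which is why the two metrics can favor different tools on the same image.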

Focus Model

- Open source (Apache 2.0 license)

- Runs locally, so images never leave your machine

- Free to use and modify

- No API dependencies or internet required

- Available via Python package, Docker, and source code

Clipping Magic

- Commercial web service

- Subscription-based pricing model

- Browser-based interface with manual editing tools

- Requires internet connection and account

- Established tool with years of development

Win Count Summary

Overall Performance (Lower error is better)

| Metric | Focus Wins | Clipping Magic Wins |
|---|---|---|
| MAE (Mean Absolute Error) | 24 | 15 |
| MGE (Mean Gradient Error) | 19 | 20 |

Focus achieves the lower MAE in 24 comparisons versus 15 for Clipping Magic, so its mattes are more often closer to the ground truth overall across the test dataset. On MGE the split is nearly even (20 to 19 in Clipping Magic's favor), so the two tools are effectively comparable at preserving fine edge detail. Note that win counts show how often each tool comes out ahead, not by how much.
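The win counts are a per-image tally: for each metric, whichever tool has the lower error on an image gets the win. A minimal sketch of that tally, assuming per-image error lists in the same image order. `count_wins` is a hypothetical helper, and the values in the usage lines are made-up illustrative numbers, not results from this comparison. The page does not say how exact ties are handled, so this sketch reports them separately.

```python
from typing import Sequence

def count_wins(focus_errors: Sequence[float], cm_errors: Sequence[float]) -> dict:
    """Count, per image, which tool has the lower error for one metric.

    Assumption: exact ties are reported separately rather than awarded to either tool.
    """
    if len(focus_errors) != len(cm_errors):
        raise ValueError("both error lists must cover the same images, in the same order")
    focus_wins = sum(f < c for f, c in zip(focus_errors, cm_errors))
    cm_wins = sum(c < f for f, c in zip(focus_errors, cm_errors))
    return {
        "focus": focus_wins,
        "clipping_magic": cm_wins,
        "ties": len(focus_errors) - focus_wins - cm_wins,
    }

# Illustrative per-image MAE values (made up, not from this comparison)
focus_mae = [0.010, 0.050, 0.020, 0.030]
cm_mae = [0.020, 0.040, 0.020, 0.060]
print(count_wins(focus_mae, cm_mae))
```

Reporting the mean per-image error for each tool alongside the tally shows whether the wins come with large or small margins.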

Visual Comparisons with Error Metrics

These comparisons show real-world performance across diverse image types, with error metrics giving an objective quality measurement for each one.

About the Test Dataset

- Test set only: All images are from the test set, not used in training or validation of any model shown here.

- Fair comparison: Human portraits are Midjourney-generated to ensure they could not have appeared in the training datasets of any background removal tool, guaranteeing a fair comparison.

- Real-world variety: Non-portrait images are personal photographs spanning 20 years, taken on medium format film, 35mm film, an early 2.1-megapixel digital camera, various smartphones, and a professional full-frame camera with prime lenses.

- No cherry-picking: Images were not selected to favor our model. Both successful results and challenging cases are shown.

Technical Specifications

| Feature | Focus (v0.2.0) | Clipping Magic |
|---|---|---|
| Status | Active (Open Source) | Active (Commercial) |
| License | Apache 2.0 (Open Source) | Proprietary |
| Deployment | Local (CPU/GPU) | Web service (cloud-based) |
| Privacy | Images never leave your computer | Server-side processing |
| Pricing | Free (unlimited local use) | Subscription-based |
| Integration | Python package, Docker, CLI | Web interface, API |
| Manual Editing | Not included (automatic only) | Built-in editing tools |
| Internet Required | No | Yes |
| Quality | Excellent (see metrics above) | Excellent (see metrics above) |
| Best For | Privacy-sensitive apps, automation, local processing | Manual refinement, web workflows |

Installation & Usage

Focus is available through multiple installation methods for local use.

Focus (Open Source)

Run instantly with Docker (uses Focus by default)

```shell
docker run -p 80:80 withoutbg/app:latest
```

Python Package

Install via pip for Python integration:

```shell
pip install withoutbg
```

See the full Python SDK documentation for integration details.

Clipping Magic

Clipping Magic is a commercial web service. Visit their website for pricing and access.

Quality Analysis Summary

Both Focus and Clipping Magic deliver excellent quality across most image types. The error metrics show that performance varies depending on the specific characteristics of each image:

- Complex hair and fine details: Results vary by image, with each tool excelling in different cases

- Motion blur: Both tools handle motion blur effectively, with comparable accuracy

- Challenging lighting: Performance depends on specific lighting conditions in each image

- Overall accuracy: Error metrics show competitive performance across the full dataset

The choice between Focus and Clipping Magic depends on your specific requirements: privacy and local processing (Focus) versus manual editing tools and web-based workflow (Clipping Magic).

Related Resources

- Focus Model Results - Quality examples from the open source Focus model

- Focus vs Pro - Compare open source Focus with our premium Pro API model

- Snap vs Focus - Compare older Snap model with current Focus model

- API Documentation - Complete guide to using our Pro model via API

- Python SDK - Python package for Focus open source model